January 22 + 23, 2019.

Java programs begin execution starting from a main method

public static void main (String[] args) {...}.

Primitives vs. Non-Primitives We keep track of data using variables. Variables come in two flavors: primitives and non-primitives.

Primitives in Java include only the following: byte, short, int, long, float, double, boolean, char. All other kinds of variables are

non-primitives. Variables representing primitives store the appropriate raw value, while variables representing non-primitives store

references to the actual data.

The creators of Java made this design decision for the language due to efficiency justifications. Imagine that you're in at an interview for your dream job. The manager really loves you and is almost ready to hire you, but first, they'd like to ensure that everything you've said about yourself is true. And so, the manager would like to verify with your best friend that you have indeed been truthful. But now the question is, would it be appropriate for you to clone your best friend so that the manager can chat with them? Probably not...your friend might not appreciate that very much. It makes much more sense to provide the manager with a

reference to your friend, a phone number perhaps. This is why Java handles "complicated" (non-primitive) variables using references.

This goes hand-in-hand with what Professor Hug calls the

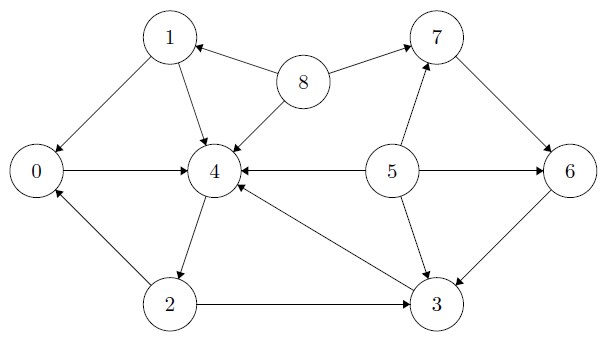

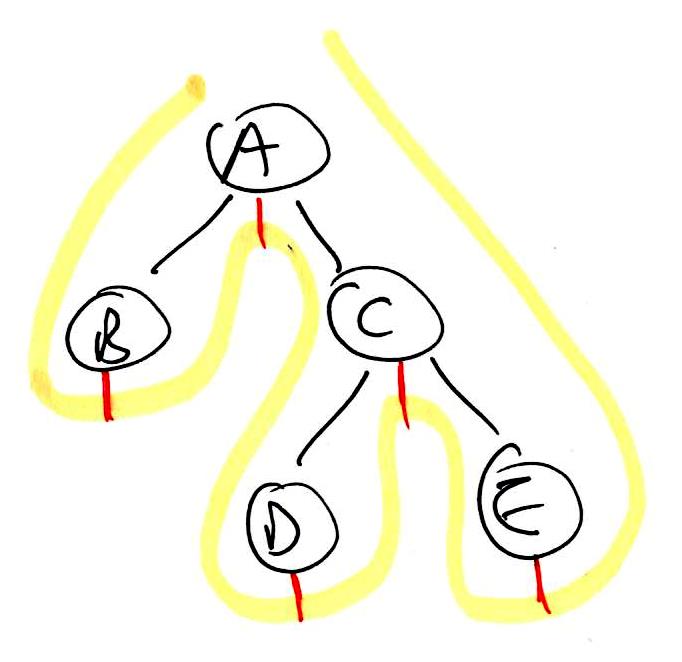

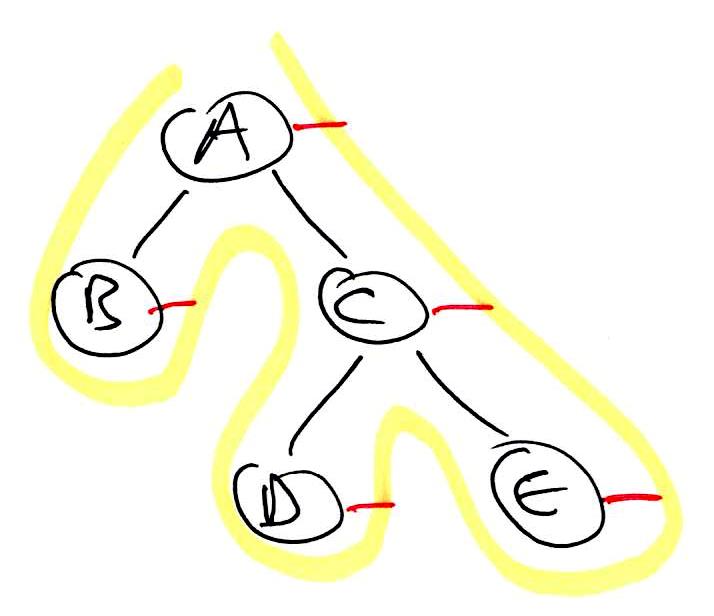

Golden Rule of Equals. When we are drawing

box and pointer diagrams, when a variable's contents are asked of, we provide whatever is inside that variable's corresponding box. This means that for primitives we copy the raw value, while for non-primitives we copy the arrow that we have drawn, such that our copied arrow points to the same destination. Given this distinction, if a function takes in both a primitive and a non-primitive as arguments, and it mangles both during the function call, when the function is over, which of the two (or both?) were actually changed? See the example below to find out:

Iteration and Recursion Often times, we want to write code that does something over and over again. We have two techniques for doing this: iteration and recursion.

Iteration involves using a loop. In Java, one kind of loop is the

while loop. To evaluate it, we follow these steps in order:

- Evaluate the loop condition. If it is false the loop is over and execution continues starting after the closing curly bracket of the loop.

- If it is true, then we start at the top of the loop's body of code and execute those lines in order until we reach its end.

- At this point, we go back to step 1 and check the condition again.

It is important to note that even if the condition becomes false in the middle of executing the loop body, the loop does not magically quite immediately. The loop can only quit in between executions of its body (unless there is a

break statement, an

Exception is thrown, etc.). Another kind of loop is the

for loop. This kind of loop was introduced because programmers noticed that loops in general typically contained the initialization of some counter variable, the check of some condition against that counter variable, and the modification of this counter variable as iterations occur. For loops simply bundle these three elements together into a visually convenient place. We evaluate for loops following these steps in order:

- Initialize the counter variable.

- Evaluate the loop condition. If it is false the loop is over and execution continues starting after the closing curly bracket of the loop.

- If it is true, then we start at the top of the loop's body of code and execute those lines in order until we reach its end.

- Adjust the counter value according to the specified fashion. Usually an incrementation or decrementation.

- At this point, we go back to step 2 and check the condition again.

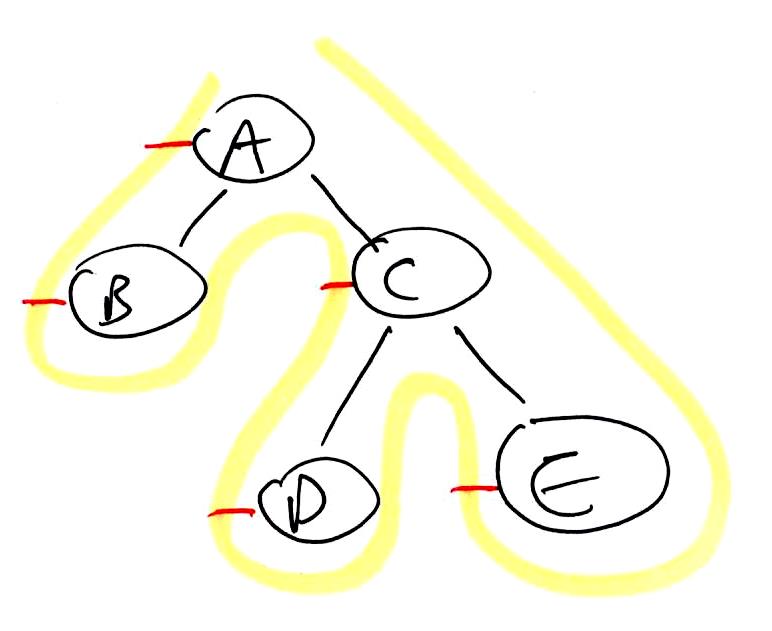

The other option we have is

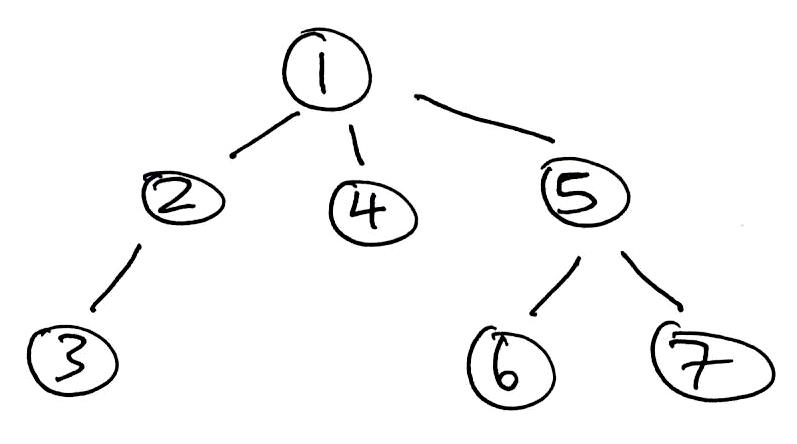

recursion. This occurs anytime we have a function and that function's body of code contains a statement in which it calls upon itself again. To prevent infinite recursion, we want our recursive functions to contain

base cases, which are if statements that provide direct results of the simplest situations, and in all other cases our recursive calls should aim to move our complicated situation closer and closer to one of our base cases.

With the classic Fibonacci exercise (#3 on the worksheet), we saw how there may be many ways to solve the same problem and to arrive at the correct answer. Therefore, simply solving a problem one way is cute and all, but in the real world, that doesn't mean you're done--we want to find the "best" solution. What does "best" mean? It means code that is not only correct, but also fast, memory efficient, and clear to other human readers. In other words, in this class we will learn to consider time efficiency, space efficiency, and style/documentation.